Split testing website marketing messages with Minitab’s Chi-Square % Defective comparison tests

Six Sigma techniques have expanded beyond traditional manufacturing companies into healthcare, the movie industry, government and now online marketing.

Split testing (also called A/B testing) is one of the more popular techniques being used today by website developers, programmers and any company with an online presence.

Split testing is a comparison test used to determine what works best on a website. Site visitors are shown different images, colors, phrases, layouts, etc., and the site tracks which versions get the most “interest.” Interest can be measured by time spent on the website, the percentage of clicks on a link or ad, website load time, and even cursor scrolling patterns.

For example, a website might want to determine which button gets more clicks: one that says “Free Sign Up” (option A) or one that says “Register” (option B).

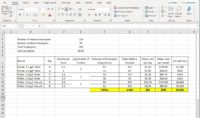

After enough visitors have arrived on the site (i.e., the sample size is adequate), the click rates are compared to determine which option performed best. The click rate is the number of site visitors who clicked the button divided by the total number of visitors who saw that option, usually expressed as a percentage.

In the image above, 78% of the visitors clicked the button when it said “Free Sign Up,” but only 34% clicked it when it said “Register.”
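As a minimal sketch of the click-rate definition above, the Python function below divides clicks by visitors and scales the result to a percentage. The function name `click_rate` is our own, and because the image shows only percentages, the 39-of-50 and 17-of-50 counts are made-up numbers chosen to reproduce the 78% and 34% figures.

```python
def click_rate(clicks: int, visitors: int) -> float:
    """Click rate as a percentage: visitors who clicked / visitors who saw the option * 100."""
    if visitors <= 0:
        raise ValueError("visitors must be a positive count")
    if not 0 <= clicks <= visitors:
        raise ValueError("clicks must be between 0 and visitors")
    return 100 * clicks / visitors


# Hypothetical counts chosen to match the percentages in the image
print(click_rate(39, 50))  # 78.0  ("Free Sign Up")
print(click_rate(17, 50))  # 34.0  ("Register")
```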

The option with the higher click rate (“Free Sign Up”) would be selected, and the other option would be dropped (or modified and run in another A/B test).

Essentially, split (A/B) testing is a simplified hypothesis test or design of experiments (DOE).

In our example, assuming I had more than 100 site visitors, the difference in percentages is very likely statistically significant (not due to random chance). However, in some situations the percentages will be much closer together. And what if we want to run an A/B/C test (three options instead of only two)?
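To make “very likely significant” a little more concrete, here is a sketch of a pooled two-proportion z-test, a standard textbook test for comparing two click rates. It assumes hypothetical counts of 50 visitors per button (100 in total) at the 78% and 34% rates from the image, plus a second, made-up pair of close rates (40% vs. 36%) for contrast. The function name is our own.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)              # overall click rate if A and B were the same
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical: 50 visitors per button, 39 vs. 17 clicks (78% vs. 34%)
print(two_proportion_z_test(39, 50, 17, 50))
# Hypothetical close call: 20 vs. 18 clicks out of 50 each (40% vs. 36%)
print(two_proportion_z_test(20, 50, 18, 50))
```

With these assumed counts, the first comparison gives z of roughly 4.4 and a p-value well below 0.001, while the close pair gives a p-value far above 0.05. The same sample size can be convincing for a big gap and inconclusive for a small one.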

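Since the primary metric in split testing is a click rate (a proportion) rather than a continuous measurement, the split test cannot be analyzed with an Analysis of Variance (ANOVA). Instead, you can either run a 2-sample proportions test on each comparison (A vs. B, B vs. C, and A vs. C), or use Minitab’s Chi-Square % Defective analysis, which compares all of the options in a single test. Running several pairwise tests also raises the overall chance of a false positive, which a single overall test avoids.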
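For readers who want to see what a chi-square comparison of several click rates involves, the sketch below runs a standard Pearson chi-square test on a table with one row per option (clicks and non-clicks). We assume, without having checked Minitab’s documentation, that the overall p-value in Minitab’s Chi-Square % Defective output comes from an equivalent test. The helper names and the counts in the example are hypothetical. The p-value uses the closed-form chi-square tail for integer degrees of freedom, so no third-party libraries are needed.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: erfc(sqrt(x/2)) + sqrt(2x/pi) * exp(-x/2) * sum of x^(i-1) / (1*3*...*(2i-1))
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for i in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * i + 1)
    return total


def chi_square_proportions(clicks: list[int], visitors: list[int]) -> tuple[float, int, float]:
    """Pearson chi-square test that all click rates are equal; returns (statistic, df, p)."""
    total_clicks, total_visitors = sum(clicks), sum(visitors)
    if total_clicks == 0 or total_clicks == total_visitors:
        raise ValueError("need at least one click and one non-click overall")
    pooled = total_clicks / total_visitors      # click rate if all options were equal
    stat = 0.0
    for x, n in zip(clicks, visitors):
        # Compare observed clicks and non-clicks with their expected counts
        for observed, expected in ((x, n * pooled), (n - x, n * (1 - pooled))):
            stat += (observed - expected) ** 2 / expected
    df = len(clicks) - 1
    return stat, df, chi2_sf(stat, df)


# Hypothetical counts for an A/B/C test (not the data from our Hello Bar test)
stat, df, p = chi_square_proportions([20, 30, 45], [2000, 2000, 2000])
print(f"chi-square = {stat:.2f}, df = {df}, p = {p:.4f}")
```

The degrees of freedom are the number of options minus one, so an A/B/C test has 2. Like any chi-square test, this one relies on a large-sample approximation, so it is less trustworthy when the expected number of clicks for an option is small (a common rule of thumb is at least 5).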

We recently performed a split test on our website using three different messages in the bar at the top of the page. The green top bar comes from Hello Bar, a service that is free to use (for one website only).

The three messages we tested, along with their background colors:

- A: “Check out all the FREE downloads and information” (Blue)

- B: “5S and Control Chart SPC templates for FREE” (Gold)

- C: “FREE Lean and Six Sigma Excel Templates” (Green)

After displaying these three messages to our site visitors for a month (done automatically by Hello Bar), we reviewed the data.

- A: “Check out all the FREE downloads and information” (Blue) = 0.5% click rate

- B: “5S and Control Chart SPC templates for FREE” (Gold) = 0.8% click rate

- C: “FREE Lean and Six Sigma Excel Templates” (Green) = 1.6% click rate

From the data, the last option (C) seems to have performed the best. If I were a betting person, I would have predicted the second option (B), but that’s why we collect data to make decisions! As Six Sigma practitioners, we must also ask whether these differences are statistically significant, or whether they could change with more samples.
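One way to frame “could they change with more samples?” is to ask how many visitors a test would need. The sketch below uses the common normal-approximation sample-size formula for comparing two proportions (two-sided, no continuity correction). Plugging in the observed 0.8% and 1.6% rates for B and C, and treating them as if they were the true rates (an assumption), with a 5% significance level and 80% power, gives roughly 2,900 visitors per message. The function name and the default alpha and power are our own choices, not Minitab settings.

```python
import math
from statistics import NormalDist


def visitors_per_option(p1: float, p2: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per option to detect p1 vs. p2 with a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    root = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
            + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2)))
    return math.ceil(root ** 2 / (p1 - p2) ** 2)


# B (0.8%) vs. C (1.6%), treating the observed rates as the true rates
print(visitors_per_option(0.008, 0.016))
```

Because the required sample grows with the inverse square of the difference, small click rates that are close together need thousands of visitors per option before a real difference reliably shows up as significant.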

Using Minitab’s Assistant (highly recommended when you are unsure which test to perform), select Assistant > Hypothesis Tests…

Next, we choose “Compare two or more samples” (since we are running an A/B/C test). Under that section, because our data are proportions (click rates) rather than measurements (like website speed or time spent on the website), we select “Chi-square % Defective.”

By the way, if you were running only an A/B test, you would select “Compare two samples with each other” and then “2-sample % Defective.”

On the next screen, we tell Minitab which worksheet columns hold our data. For X, select “Style” (or whatever column you use to identify your options).

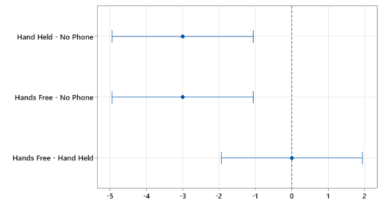

Starting in the upper left, the p-value is 0.031, which is less than 0.05, so we conclude that at least one option’s click rate is statistically different from another’s. The upper right section shows that the statistically significant difference is between A and C. It also shows that option B is not statistically better than option A, nor is it statistically worse than option C. Therefore, we should definitely drop option A from our Hello Bar message, but we need more data to conclude whether option C is statistically better than option B.
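The post doesn’t say how Minitab decides which pairs differ, so the sketch below shows one common approach rather than Minitab’s own: pooled two-proportion z-tests for every pair, judged against a Bonferroni-adjusted significance level (0.05 divided by the number of pairs). The function name and the click and visitor counts are hypothetical.

```python
import math
from itertools import combinations


def pairwise_bonferroni(clicks: dict[str, int], visitors: dict[str, int],
                        alpha: float = 0.05) -> list[tuple[str, str, float, bool]]:
    """Pooled two-proportion z-test for every pair of options, Bonferroni-adjusted."""
    pairs = list(combinations(sorted(clicks), 2))
    adjusted_alpha = alpha / len(pairs)          # 0.05 / 3 pairs = 0.0167 for A/B/C
    results = []
    for a, b in pairs:
        x1, n1, x2, n2 = clicks[a], visitors[a], clicks[b], visitors[b]
        pooled = (x1 + x2) / (n1 + n2)           # shared click rate under "no difference"
        se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
        z = (x1 / n1 - x2 / n2) / se
        p = math.erfc(abs(z) / math.sqrt(2))     # two-sided p-value from the normal tail
        results.append((a, b, p, p < adjusted_alpha))
    return results


# Hypothetical counts (not the data from our Hello Bar test)
for a, b, p, significant in pairwise_bonferroni({"A": 10, "B": 12, "C": 40},
                                                {"A": 1000, "B": 1000, "C": 1000}):
    print(f"{a} vs {b}: p = {p:.4f}, significant after Bonferroni: {significant}")
```

With three options there are three pairs, so each comparison must reach p < 0.0167. The adjustment is conservative, which is one reason a gap like the one between B and C can fall short of significance until more data arrive.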

Does that mean the message makes a difference? Maybe. If you were paying attention, you noticed that each message also had a different background color, so our results are confounded with color. Perhaps site visitors are clicking more or less often because of the color, not the words. Sounds like more split testing is needed!
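One way to untangle message from color in a follow-up test is a full factorial layout, in which every message is shown with every color. The sketch below builds the 3 × 3 = 9 message/color combinations from the post and assigns visitors to them at random in equal numbers. Whether Hello Bar (or any other tool) can run a test this way is an assumption, and `assign_visitors` is a hypothetical helper.

```python
import itertools
import random
from collections import Counter

# The three messages and three background colors from the post
MESSAGES = ["A", "B", "C"]
COLORS = ["Blue", "Gold", "Green"]


def factorial_cells(messages: list[str], colors: list[str]) -> list[tuple[str, str]]:
    """Every message paired with every color (a 3 x 3 full factorial = 9 cells)."""
    return list(itertools.product(messages, colors))


def assign_visitors(visitor_ids, cells, seed: int = 0) -> dict:
    """Randomly assign visitors to cells in equal numbers (hypothetical helper)."""
    ids = list(visitor_ids)
    random.Random(seed).shuffle(ids)             # random order breaks any arrival-time pattern
    return {v: cells[i % len(cells)] for i, v in enumerate(ids)}


plan = assign_visitors(range(900), factorial_cells(MESSAGES, COLORS))
print(Counter(plan.values()))  # each of the 9 message/color cells gets 100 visitors
```

Because each message appears equally often with each color, the click rate for a message can be averaged across all three colors (and vice versa), so a color effect no longer masquerades as a message effect.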

Split testing is a great tool that has brought Six Sigma analysis into the internet marketing world. I plan to use the terms “split testing” or “A/B testing” instead of DOE or experimentation when talking to more tech-savvy audiences.

Have you tried split or A/B testing on a website? Explain what you did in the comments below…